Reproducing inequality: How AI image generators show biases against women in STEM

April 3, 2023

UNDP Serbia/MissJourney

In the last few months, you have probably played around with a generative AI application, asking it to create an interesting image, enhance an existing one or maybe write a poem. You may have even used it in the workplace instead of a designer or copywriter. Generative AI is not only fun and useful for small tasks, but also has a variety of applications across key sectors and industries. In healthcare, it can be used to develop treatment plans based on past illnesses, while it can also help city planners optimise traffic flow.

Among tech enthusiasts, these AIs are being used even more widely to find new, innovative solutions to many of the world's pressing issues, including those in the fields of science, technology, engineering, and mathematics (STEM). Whether we end up relying on AI to help us with real-life problems or just using it for small tasks, we need to be aware that with great power comes great responsibility.

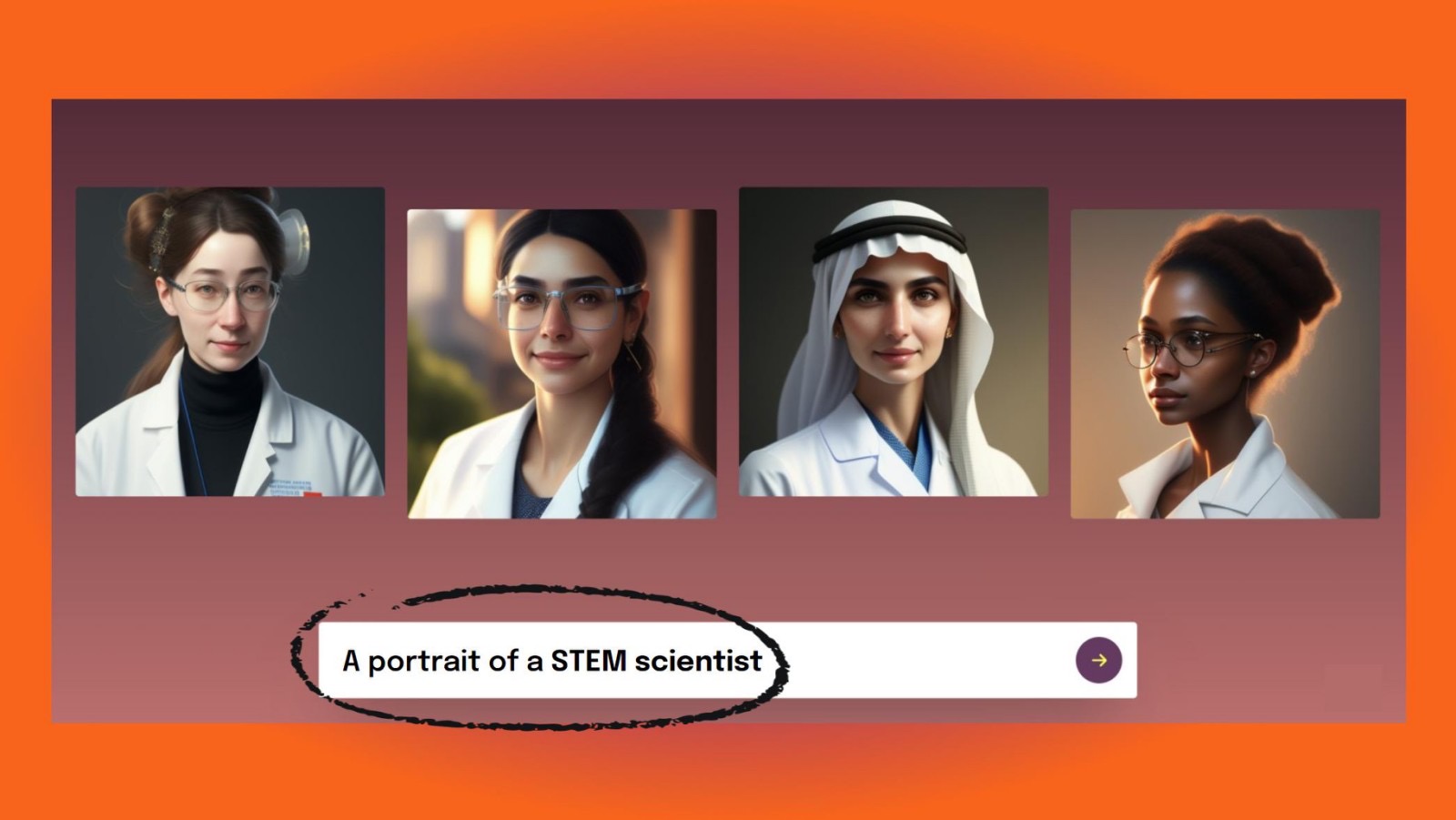

There have long been concerns that AI models, including text-to-image tools, may contribute to the reproduction of existing inequalities and stereotypes. Therefore, as part of UNDP Accelerator Lab's ongoing research on the gender gaps in STEM, we conducted a small experiment to test how two popular visual generative AIs, DALL-E 2 and Stable Diffusion, view the STEM fields when it comes to the representation of women.

These are generative text-to-image models that create original images based on natural language understanding. Both can produce images of animals, household items, and even abstract concepts like "happiness." We asked for visual representations of the words "engineer," "scientist," "mathematician," and "IT expert" to see if and how women would be represented.

DALL-E examples

Stable Diffusion examples

As you can see, 75-100% of the AI-generated images show men, reinforcing the stereotypes of STEM professions as male-dominated: both as professions that overwhelmingly attract men and as professions in which men excel compared to women. For comparison, women currently make up between 28% and 40% of graduates in STEM fields globally, and this percentage varies considerably between countries and regions.
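A share like the one above can be tallied with a few lines of code once each generated image has been labelled by a human reviewer. The sketch below is only an illustration of that arithmetic: the function name `share_of_men` and the `annotations` dictionary are hypothetical, and the labels are placeholder values, not the data from our experiment.

```python
from collections import Counter


def share_of_men(labels):
    """Return the fraction of images labelled 'man' among those labelled 'man' or 'woman'."""
    counts = Counter(labels)
    total = counts["man"] + counts["woman"]
    if total == 0:
        raise ValueError("no labelled images to count")
    return counts["man"] / total


# Placeholder annotations for illustration only (NOT the experiment's data):
# one list of reviewer-assigned labels per prompt.
annotations = {
    "engineer": ["man", "man", "man", "man"],
    "scientist": ["man", "man", "man", "woman"],
}

shares = {prompt: share_of_men(labels) for prompt, labels in annotations.items()}

for prompt, share in shares.items():
    print(f"{prompt}: {share:.0%} of labelled images show men")
```

Comparing these per-prompt shares with a real-world baseline, such as the share of women among STEM graduates, is what makes the skew visible.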

The visual reproduction of gender stereotypes is a glaring example of existing inequalities and shows that new AI tools alone cannot be relied on to create a more equitable system. In fact, if AI tools are left without necessary human oversight and input, we risk worsening existing gender disparities in STEM fields, where women are already underrepresented. Without human guidance, through debates about values as well as concrete goals, AI can have a negative impact on women's recruitment, retention, and advancement opportunities in STEM fields. Even if these tools create only images, those images can discourage women from seeing themselves as engineers, mathematicians, and scientists.

This problem stems from the way data and algorithms are used to train AI models. If the data used to train these models are biased or reflect societal inequalities, the resulting AI model will also be biased. In turn, this can lead to AI models reinforcing and perpetuating existing prejudices and discriminatory practices.

If text-to-image models can be so skewed against women, one might wonder what would happen if AIs were used more widely in hiring or promotion processes and fed existing data. In fact, studies have already shown that AI used in hiring, an area where it is slowly gaining a foothold, fails to reduce biases.

Given that the use of generative AI tools is spreading fast, with potential for use in various fields, it is critical to consider the importance of just representation of women. It is important to keep in mind that the gender gap is not due to women's lack of interest or skills in STEM fields, but to their attrition along the career path caused by systemic discrimination and structural inequality. For example, research shows that the gap between the shares of men and women widens as they progress in their careers towards managerial posts. This is known as the "Leaky Pipeline" phenomenon.

It is therefore important to acknowledge and talk about the limitations and potential risks of AI technology. Although it can increase our productivity and creativity, it cannot replace human judgement and decision-making. This new technology is, ultimately, only a tool, not a panacea, and it is our responsibility to use it in an ethical manner.

The essential question is what can be done to mitigate the risks and ensure that generative AI tools – especially those used in more sensitive areas, such as hiring – are utilised in a way that promotes gender equality and diversity in STEM fields.

It is important to involve diverse and inclusive teams in the development and testing of AI models to ensure a variety of viewpoints are represented. This includes diversity in terms of race, ethnicity, and socio-economic background, in addition to gender representation.

As we have already mentioned, one of the key issues is that the data and algorithms used to train AI models can reflect and reinforce societal biases. Therefore, this area deserves special attention. We need to ensure that the data used to train AI models is representative of diverse groups and that potential biases are considered when developing algorithms.

Models should be thoroughly tested before they are made public. Mechanisms for continuous monitoring and evaluation of the AI models should be implemented, including the ability for users to rate responses and report inappropriate content.

Finally, transparency of AI models is crucial. Each model should be accompanied by a Model Card, which includes information about the model's authors, dataset composition, machine learning algorithm used, intended model use, potential adverse effects, and the evaluation results.
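As a rough illustration, the Model Card contents listed above could be captured in a simple data structure that makes gaps easy to spot before a model is released. This is a minimal sketch under our own assumptions: the class `ModelCard`, its field names, and the helper `missing_sections` are hypothetical and do not follow any official Model Card schema.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelCard:
    """Hypothetical container for the Model Card items named in the text."""
    authors: list = field(default_factory=list)
    dataset_composition: str = ""
    algorithm: str = ""
    intended_use: str = ""
    potential_adverse_effects: str = ""
    evaluation_results: dict = field(default_factory=dict)


def missing_sections(card):
    """Return the names of sections that are still empty, in declaration order."""
    return [f.name for f in fields(card) if not getattr(card, f.name)]


# Illustrative draft card with placeholder values.
draft = ModelCard(authors=["Example Team"], intended_use="Illustration only")
print(missing_sections(draft))
```

A check like this could run before publication so that a card with no stated dataset composition or adverse effects is flagged rather than silently released.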

By taking these measures, we can ensure that companies use AI and similar tools in an ethical and responsible manner, thereby promoting gender equality and diversity in STEM fields.

There have been many great initiatives to use these new tools for good in very creative ways. One of them is the UNDP’s VR exhibition “Digital imaginings: Women's campAIgn for equality,” which uses generative AI to create images that highlight the visions and work of women across Europe and Central Asia who are working in diverse ways to give women more opportunities, justice, and power in their communities. Hopefully, in the future and if used wisely, like in this exhibition, generative AI will be used to inspire and empower rather than frustrate and reproduce existing inequalities.
